Vector Databases and LLMs – Let the Show Begin!

If you've already set up a vector database—I've previously covered the basics on this blog—you know how powerful they can be for semantic searches, document retrieval, clustering related content, and much more. But trust me, the real excitement kicks in when you combine this database with a Large Language Model (LLM).

Here's a quick example: I recently vectorized the European Union's proposed budget for 2025. Imagine around 20 MB of dense legal and financial jargon, the type of document you certainly wouldn't pick up for casual evening reading. If you stick to the traditional approach and simply run semantic similarity queries against your vector DB, the results are often disappointing.

Enter GPT-4.1 and the Function-Based Approach

Instead of the classic trial-and-error database querying, let's bring GPT-4.1 into play. Specifically, we'll define a function named get_eu that the model can call. This function takes two parameters:

- text: The query or statement you're looking for in the vector DB.
- limit: The maximum number of similar vector chunks to retrieve.
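
To make the two parameters concrete, here is a minimal sketch of how the arguments of a get_eu call could be parsed on the receiving side. In this setup limit is passed as a string, so it has to be converted before use. The helper name parse_get_eu_args, the sample payload, and the choice to reject an empty text are assumptions made for illustration. The fallback of 5 mirrors the default used by the endpoint.

```python
import json

DEFAULT_LIMIT = 5  # fallback used when limit is missing or not numeric


def parse_get_eu_args(raw_arguments: str) -> tuple[str, int]:
    """Parse the JSON arguments of a get_eu call into (text, limit)."""
    args = json.loads(raw_arguments)
    text = str(args.get("text", "")).strip()
    if not text:
        # Assumption for this sketch: an empty query is treated as an error.
        raise ValueError("get_eu needs a non-empty 'text' argument")
    limit_str = str(args.get("limit", ""))
    limit = int(limit_str) if limit_str.isdigit() else DEFAULT_LIMIT
    return text, limit


# Hypothetical payload, shaped the way the model might send it.
print(parse_get_eu_args('{"text": "EU body responsible for annual budget", "limit": "8"}'))
```

Keeping the conversion in one place means a malformed limit degrades to the default instead of breaking the query.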

Here's the function schema definition in JSON:

    {
      "name": "get_eu",
      "description": "Retrieve fields of JSON data from external vector DB",
      "strict": true,
      "parameters": {
        "type": "object",
        "required": ["text", "limit"],
        "properties": {
          "text": { "type": "string", "description": "The input text to retrieve data" },
          "limit": { "type": "string", "description": "Number of similar chunks to retrieve, dynamically determined by the LLM." }
        },
        "additionalProperties": false
      }
    }

Python Implementation of the Function

The Python API endpoint to handle this logic might look like this:

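    import json

    import numpy as np
    import psycopg2
    from fastapi import FastAPI, Request

    app = FastAPI()

    # client, get_embedding2 and normalize_text are project helpers not shown here.

    @app.post("/eu")
    async def eu(request: Request):
        data = await request.json()

        # limit arrives as a string; fall back to 5 if it is missing or not numeric.
        limit_str = str(data.get("limit", ""))
        limit = int(limit_str) if limit_str.isdigit() else 5
        text = data.get("text") or ""

        # Embed the query text and render it as a pgvector literal, e.g. "[0.1, 0.2, ...]".
        emb = get_embedding2(client, normalize_text(text), "text-embedding-3-small")
        embedding_str = str(np.array(emb).tolist())

        conn = psycopg2.connect(
            dbname="eu_embeddings",
            user="postgres",
            password="...",
            host="localhost",
            port="5437",
        )
        try:
            with conn.cursor() as cursor:
                # <#> is pgvector's negative inner product: the lowest score is the closest match.
                # Values are passed as parameters instead of being formatted into the SQL string.
                cursor.execute(
                    """SELECT id, file, page, position, text_chunk,
                              (embedding <#> %s::vector) AS similarity_score
                       FROM embeddings
                       ORDER BY embedding <#> %s::vector ASC
                       LIMIT %s""",
                    (embedding_str, embedding_str, limit),
                )
                columns = [desc[0] for desc in cursor.description]
                rows = [dict(zip(columns, row)) for row in cursor.fetchall()]
        finally:
            # Close the connection even if the query fails.
            conn.close()

        return json.dumps(rows, ensure_ascii=False, indent=4)

Smart Queries with GPT-4.1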

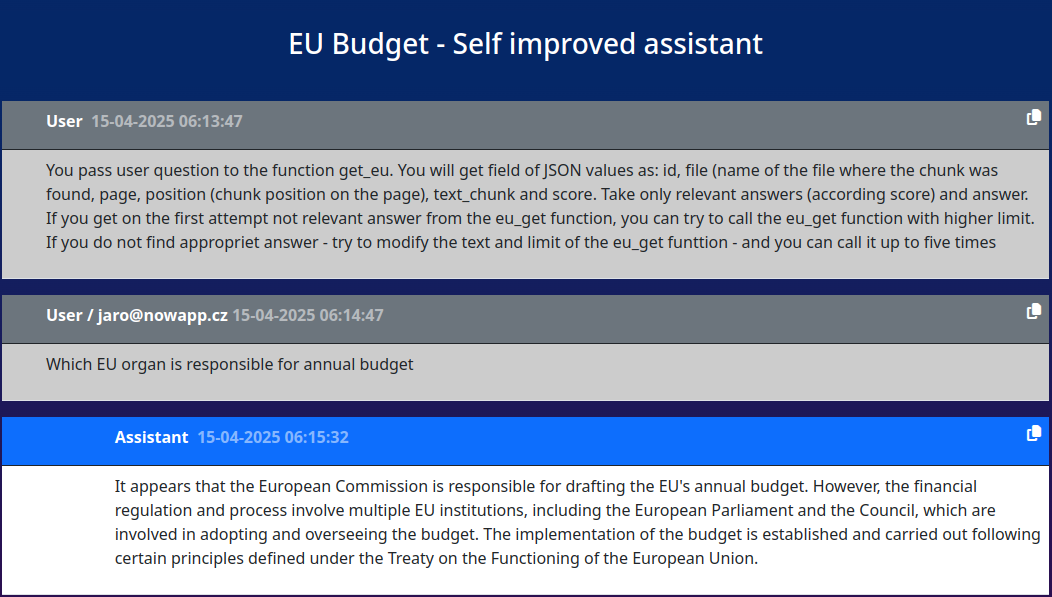

Here's where the innovation kicks in. Each GPT-4.1 thread begins with clear instructions:

"You pass the user's question to the get_eu function. You'll get JSON fields: id, file, page, position, text_chunk, and score. Only use relevant answers (based on score). If the initial response is unsatisfactory, retry with a higher limit or modified query up to five times."

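The score the model is asked to judge comes from pgvector's `<#>` operator, which returns the negative inner product, so a lower (more negative) value means a closer match. The sketch below reproduces that scoring with NumPy on toy vectors and filters rows by a cutoff. The helper names, the toy vectors, and the cutoff of -0.5 are hypothetical. A sensible threshold depends on the data and the embedding model.

```python
import numpy as np


def negative_inner_product(a, b) -> float:
    """Mirror pgvector's <#> operator: the inner product with its sign flipped."""
    return -float(np.dot(a, b))


def keep_relevant(rows, max_score=-0.5):
    """Keep rows whose similarity_score is at or below max_score (lower is closer)."""
    return [row for row in rows if row["similarity_score"] <= max_score]


# Toy unit-length vectors standing in for real embeddings.
query = np.array([1.0, 0.0])
chunks = {
    "close": np.array([0.8, 0.6]),
    "far": np.array([0.0, 1.0]),
}
rows = [
    {"id": name, "similarity_score": negative_inner_product(query, vec)}
    for name, vec in chunks.items()
]
print(keep_relevant(rows))
```

With unit-length vectors the inner product equals the cosine similarity, so the scores fall between -1 (identical direction) and 1 (opposite direction).
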
Real-World Results

Here's a fascinating example of GPT-4.1 adapting its queries dynamically. It issued six slightly different queries:

- Limit: 3 | Query: EU organ responsible for annual budget
- Limit: 5 | Query: EU body responsible for annual budget preparation
- Limit: 8 | Query: EU body responsible for annual budget
- Limit: 10 | Query: EU organ responsible for preparing annual budget
- Limit: 5 | Query: rights of EU member state governments to influence final EU budget
- Limit: 10 | Query: member states role in EU budget adoption

In total, GPT-4.1 retrieved and reviewed 41 text chunks across these six calls, progressively refining its queries based on earlier results. That flexibility makes it practical to search complex documents where a single similarity query falls short, and it can improve both the accuracy and the usefulness of what comes back.
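
The bookkeeping behind such a run can be sketched in a few lines. Similar queries tend to return overlapping chunks, so the sketch merges results by chunk id while also counting every row fetched. The function collect_chunks and the fake_retrieve stub are hypothetical. In a real setup the retriever would call the /eu endpoint, and the number of unique chunks would depend on the data.

```python
def collect_chunks(queries, retrieve):
    """Run each (limit, text) query and merge the results, dropping repeated chunk ids."""
    seen = {}
    fetched = 0
    for limit, text in queries:
        rows = retrieve(text, limit)
        fetched += len(rows)
        for row in rows:
            seen.setdefault(row["id"], row)  # keep the first copy of each chunk
    return list(seen.values()), fetched


def fake_retrieve(text, limit):
    """Stand-in for the /eu endpoint: returns `limit` rows with ids 0..limit-1."""
    return [{"id": i, "text_chunk": f"chunk {i}"} for i in range(limit)]


queries = [
    (3, "EU organ responsible for annual budget"),
    (5, "EU body responsible for annual budget preparation"),
    (8, "EU body responsible for annual budget"),
    (10, "EU organ responsible for preparing annual budget"),
    (5, "rights of EU member state governments to influence final EU budget"),
    (10, "member states role in EU budget adoption"),
]
unique, fetched = collect_chunks(queries, fake_retrieve)
print(len(unique), fetched)
```

The fetched count is simply the sum of the six limits, which is where the figure of 41 comes from. The unique count printed here is an artifact of the stub.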

Exciting times ahead!